Did you know that up to 80% of your website’s crawl budget might be wasted on unnecessary, low-quality, or duplicate content? Google limits the number of URLs it will crawl on each website within a given period (the crawl budget), and a poorly optimized site structure can waste that budget.

According to a study by Ahrefs, Googlebot crawls only about 16% of the total web pages it knows about, prioritizing those most likely to be useful to users and relevant to search queries.

This makes it important to understand crawling in detail and optimize your website so that Google can crawl it as efficiently as possible. Crawling is the process by which a search engine discovers and fetches your website’s pages and content so that it can decide whether to index them, which in turn makes rankings possible.

When a website goes live and asks Google to check its content, Google sends its crawlers to discover the website, its pages, and the content in them. The crawlers check the content of one page and then follow the links on that page to reach the next one. This process of moving from page to page to skim the content is called crawling.

This blog will dive deep into the key concepts of crawling and suggest measures to improve crawlability for better SEO.

What Is a Crawler (Spider) in SEO?

To understand spiders and crawlers, you must understand the basic architecture of how a page ends up on the user’s screen. For example, when you publish a new blog post that you want Google to see (and your meta tags allow it), Google dispatches ‘spiders’ or ‘crawlers’. These are bots that visit your website, explore the new content through the links pointing to it, and store it in Google’s database.

Once the content is stored in the database (the index), the new blog post is said to have been indexed. This means that the web page can now appear in the search results. If your page isn’t indexed, it cannot appear in search results.

Google can learn about new pages in various ways. The most common ones are internal and external links, social media links, manual submission through Google Search Console, and inclusion on your provided sitemap.

Note that Google isn’t constantly crawling your website. The frequency of crawls depends on many factors, which are discussed in detail below.
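The link-following behaviour described in this section can be sketched in a few lines of Python. This is only a toy illustration, not how Googlebot is implemented: the `SITE` dictionary is a made-up in-memory website standing in for real HTTP requests, and the `crawl` function and `LinkExtractor` class are hypothetical names chosen for this example.

```python
from collections import deque
from html.parser import HTMLParser

# Hypothetical in-memory "website": URL path -> HTML body.
SITE = {
    "/": '<a href="/blog">Blog</a> <a href="/about">About</a>',
    "/blog": '<a href="/blog/post-1">Post 1</a> <a href="/">Home</a>',
    "/about": "<p>About us</p>",
    "/blog/post-1": '<a href="/blog">Back to blog</a>',
    "/orphan": "<p>No page links here</p>",
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start="/"):
    """Visit pages breadth-first by following links; return the visit order."""
    seen = {start}
    queue = deque([start])
    order = []
    while queue:
        url = queue.popleft()
        order.append(url)
        parser = LinkExtractor()
        parser.feed(SITE.get(url, ""))
        for link in parser.links:
            # Only queue known pages that have not been visited yet.
            if link in SITE and link not in seen:
                seen.add(link)
                queue.append(link)
    return order


print(crawl())
```

Notice that `/orphan` is never visited because no page links to it. This is the same reason pages without internal links, external links, or a sitemap entry tend to stay undiscovered.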

Why is Crawling in Digital Marketing Important?

Crawling is Google’s (or any other search engine’s) way of exploring your content. Your web pages are not visible in search by default, so to speak. Only after this exploration and their successful inclusion in the index can they appear in search results.

However, being indexed doesn’t mean your page will appear in the top rankings of SERPs (Search Engine Result Pages). It only means that now you are eligible to be ranked. Your ranking still depends upon the quality of your content, and how well-optimized it is.

This is why improving crawlability is important. All the effort you have made in creating high-quality content can go to waste if you ignore crawlability, which is one of the most important aspects of technical SEO.

Some Key Concepts of Crawling in Digital Marketing

Now that we have a good idea of what crawling is, we can build on that understanding by focusing on some key concepts in the crawling process.

These concepts are:

- Crawl Budget – Crawl budget is the number of pages on a website that search engine crawler bots crawl within a specific timeframe. This amount is determined by factoring in site authority, frequency of content updating, site size, and server performance.

It’s for this reason that news websites and large, authoritative websites are crawled much more frequently than other sites.

- Robots.txt – Robots.txt is a plain text file placed in the root directory of your website that tells automated agents such as crawlers or spiders how to interact with it. You can use it to allow or disallow crawling of certain pages or sections. Compliance is voluntary: reputable crawlers follow these rules, but the file itself does not enforce them.

It is public and anyone can see any website’s robots.txt file with this format: www.example.com/robots.txt

- Sitemap – A sitemap is a file that lists the URLs on a website, helping search engines discover them and index them more efficiently. It is a blueprint structure of your website that facilitates searching and indexing of the most important web pages.

- User-Agent – This is the identifier for the bot crawling your website. Google runs many bots, such as Googlebot, AdsBot-Google, Googlebot-News, etc. These bots present different ‘user-agent’ identities to your website. For example, Googlebot identifies itself as ‘Googlebot’.

- Crawl Rate – The crawl rate is the speed at which pages are requested and retrieved by the crawler for a particular website. It depends upon site performance and load-bearing capabilities of that website. Crawlers adjust their rate of exploration depending on the site load.

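- Crawl Delay – Crawl delay is the delay between successive requests from a crawler. You can request this delay through your robots.txt file. Some crawlers, such as Bingbot, respect it and slow their crawling so that no extra load is put on your website. Googlebot, however, does not support the crawl-delay directive and adjusts its crawl rate automatically based on how your server responds.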

- Crawl Depth – Crawl depth is the number of clicks/links that it takes to reach a certain page from the homepage. The greater the crawl depth, the more infrequent the crawling process for that page. This is why complex internal linking in multiple layers should be avoided.

- Meta Tags – Meta tags are HTML tags that tell a crawler how to treat a web page and its links. For example, a ‘nofollow’ value in the robots meta tag tells a crawler not to follow the links on that page (the same value can be set on a single link through its rel attribute). Similarly, ‘index’ or ‘noindex’ controls indexing.
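To see how the robots.txt, user-agent, and crawl delay concepts fit together, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The robots.txt content, the `/admin/` and `/drafts/` paths, and the `OtherBot` name are made up for illustration. Python’s parser is only one interpretation of the rules, and real crawlers may differ in the details.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content (normally served at /robots.txt).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Crawl-delay: 5

User-agent: Googlebot
Disallow: /drafts/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A crawler checks the rules for its own user-agent before fetching a URL.
for agent in ("Googlebot", "OtherBot"):
    for url in ("https://www.example.com/admin/",
                "https://www.example.com/drafts/post"):
        print(agent, url, parser.can_fetch(agent, url))

# The crawl delay only applies to the group that declares it.
print(parser.crawl_delay("OtherBot"))
print(parser.crawl_delay("Googlebot"))
```

In this sketch the `Googlebot` group replaces the `*` group rather than adding to it, so `/admin/` is only blocked for bots that fall back to the `*` rules. If you want a rule to apply to every bot, it has to be repeated in each group.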

What is the Difference Between Crawling and Scraping?

Crawling in SEO is the process by which search engine bots discover and index the web pages on your website. Millions of bots, also known as spiders, are constantly browsing the web looking for pointers towards new pages.

Once they find such a pointer, they crawl the new page and add it to their index, making it ready to appear in the search engine results.

On the other hand, scraping is the practice of extracting specific data from websites. It is usually narrowly focused, targeting a certain piece of information that is available on many different websites. The objective of scraping is to aggregate data from various websites for analysis, or simply to display it together. For example, price tracker websites scrape e-commerce stores for the price of a certain item so they can display the best price available.
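The price tracker example can be sketched as follows. Unlike a crawler, this code does not follow links at all. It goes straight to known pages and pulls out one field. The shop names, the HTML snippets, and the `class="price"` markup are all assumptions made for this example, and a real scraper would fetch the pages over HTTP and cope with far messier markup.

```python
from html.parser import HTMLParser

# Hypothetical product pages from three shops (normally fetched over HTTP).
PAGES = {
    "shop-a.example": '<h1>Kettle</h1><span class="price">24.99</span>',
    "shop-b.example": '<div><span class="price">22.50</span></div>',
    "shop-c.example": '<p>Kettle</p><span class="price">26.00</span>',
}


class PriceExtractor(HTMLParser):
    """Captures the text inside the first <span class="price"> element."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.price = None

    def handle_starttag(self, tag, attrs):
        if tag == "span" and dict(attrs).get("class") == "price":
            self._in_price = True

    def handle_data(self, data):
        if self._in_price and self.price is None:
            self.price = float(data.strip())

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False


def scrape_prices(pages):
    """Return {shop: price} for every page where a price was found."""
    prices = {}
    for shop, html in pages.items():
        extractor = PriceExtractor()
        extractor.feed(html)
        extractor.close()
        if extractor.price is not None:
            prices[shop] = extractor.price
    return prices


prices = scrape_prices(PAGES)
cheapest = min(prices, key=prices.get)
print(cheapest, prices[cheapest])
```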

What is the Difference Between Crawling and Indexing in SEO?

Crawling and indexing are inherently related. Crawling leads to indexing, and indexing leads to ranking. As ranking content at the top in SERPs is the primary goal of SEO activities, crawling becomes the basic building block of SEO.

Once crawler bots pick up on a new page, they crawl it thoroughly looking out for spammy content, low quality pages, technical errors, server issues, etc. These are the factors that can prevent your page from being indexed. However, if all this is well managed, then the crawler bots add the web page to the index, making it ready to be seen by end users.

Crawling vs Indexing

While the two are closely tied together, comparing and contrasting crawling and indexing helps fill the remaining gaps and gives a clearer picture.

The main differences are:

- Crawling is about discovering pages, while indexing is about organizing and storing them.

- Crawlers fetch the content and pass it to the search engine, while indexing determines whether and how a page is stored so that it can be shown in the search results.

- Crawling is wholly focused on gathering new data. On the other hand, indexing tries to make sense of that data and readies the best data for the end-users.
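The split between the two steps can be illustrated with a toy inverted index. Here the crawl step is assumed to have already produced the `CRAWLED` dictionary, and the index step organizes that text so it can be looked up by word. The URLs, the text, and the function names are hypothetical, and a real search index is far more sophisticated than this.

```python
from collections import defaultdict

# Hypothetical output of the crawl step: URL -> extracted text.
CRAWLED = {
    "/blog/crawling": "crawling discovers new pages",
    "/blog/indexing": "indexing stores pages for search",
}


def build_index(pages):
    """Map each word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def search(index, word):
    """Return the URLs stored under a word, sorted for stable output."""
    return sorted(index.get(word.lower(), set()))


index = build_index(CRAWLED)
print(search(index, "pages"))     # both URLs contain "pages"
print(search(index, "indexing"))  # only the indexing post
```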

How can I improve Crawling in Google?

Making improvements in your crawlability can lead to faster indexation of your new pages, and better rankings for your pages throughout. Some ways you can improve crawl performance on your website are:

- Reduce Crawl Depth – Crawl depth of a certain web page is the number of links/clicks it takes from the home page to get there. To ensure efficient crawling, try to make your site’s structure shallow, i.e., make all pages reachable from the home page within 3 to 4 clicks.

- Improve Internal Linking – Links are how crawlers are pointed towards new pages most frequently. Make sure to follow a structural hierarchy while internally linking pages. The pillar or category pages should lead to sub-pages, and the links should be marked with keyword-rich anchor texts to help Google understand the relevance of the pages.

- Enhance Mobile-Friendliness – Over 70% of websites have been moved to mobile-first indexing as of 2020, meaning that Google primarily uses the mobile version of a website for crawling and indexing. Hence, make sure to optimize your webpages for mobile viewership. Google Search Console has many tools that can help you increase mobile-friendliness.

- Optimize Sitemap – Create an XML sitemap that includes the most important pages on your website, and submit it on the Google Search Console. A sitemap serves as a blueprint of your website, helping crawlers discover content more easily. Regularly update your sitemap to keep it ready for crawling.

- Optimize Page Load Speed – Use accelerated mobile pages (AMP), optimize images, videos, and other resources on your webpage to load faster. Consider a CDN (Content Delivery Network) to help your page load faster. Faster loading times mean crawlers can access your web pages more efficiently.
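If you have a list of your internal links, you can estimate crawl depth yourself with a breadth-first search from the home page. The sketch below assumes a hypothetical link graph (`LINKS`) and uses 4 clicks as the threshold, matching the guideline above. The function names are made up for this example.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/category", "/about"],
    "/about": [],
    "/category": ["/category/shoes"],
    "/category/shoes": ["/category/shoes/red"],
    "/category/shoes/red": ["/category/shoes/red/size-9"],
    "/category/shoes/red/size-9": ["/category/shoes/red/size-9/reviews"],
    "/category/shoes/red/size-9/reviews": [],
}


def crawl_depths(links, home="/"):
    """Return {page: minimum number of clicks from the home page}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, max_depth=4, home="/"):
    """List pages that need more than max_depth clicks to reach."""
    depths = crawl_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_depth)


print(too_deep(LINKS))
```

Pages flagged by `too_deep` are candidates for a link from a category or pillar page, which shortens the path from the home page.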

Why Can Crawlers Reject Your Website from Indexing (Crawl Errors)?

Many factors can lead to your pages not being crawled correctly. As a website owner, it is essential to stay on top of these crawl errors and ensure optimal crawlability; otherwise, your website’s performance may continue to decline.

- Manual ‘No Crawl’ Directions – If your robots.txt file disallows certain pages, a crawler will skip them. Similarly, pages carrying a ‘noindex’ tag are kept out of the index, and links marked ‘nofollow’ are not followed by crawlers.

Ensure that these instructions are only used on the pages you don’t want to index, such as admin pages or low-quality content.

- 5xx Errors – If the server hosting your website is down or experiencing errors, a crawler cannot access your webpages and abandons the crawl. If these errors and downtimes happen frequently, Google can reduce your crawl budget, which leads to late indexing of content and lower rankings.

- 404 Errors – If your internal links are broken or point to URLs that no longer exist, crawlers will encounter a 404 error. Frequent 404 errors may reduce the overall crawlability of your website, as well as its crawl frequency and crawl budget.

- Page Loading Issues – If your page takes a long time to load, crawlers may abandon it or reduce the frequency at which they crawl your website. Both outcomes harm the overall crawlability of your web pages and your SERP rankings.

- Excessive Redirects – Excessive redirects waste the crawl budget. Redirect chains and loops may confuse crawlers and make crawling inefficient. Make sure every redirect points directly to the final URL.
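Redirect chains and loops are easy to spot once you have a list of your redirects. The sketch below works on a hypothetical redirect map (`REDIRECTS`) rather than live HTTP responses, and the `trace_redirects` helper is a name made up for this example.

```python
# Hypothetical redirect map for a site: source path -> target path.
REDIRECTS = {
    "/old-page": "/older-page",
    "/older-page": "/new-page",
    "/loop-a": "/loop-b",
    "/loop-b": "/loop-a",
}


def trace_redirects(start, redirects):
    """Follow redirects from start and return (chain, is_loop)."""
    chain = [start]
    current = start
    while current in redirects:
        current = redirects[current]
        if current in chain:
            # We came back to a URL already visited: a redirect loop.
            return chain + [current], True
        chain.append(current)
    return chain, False


for source in REDIRECTS:
    chain, is_loop = trace_redirects(source, REDIRECTS)
    hops = len(chain) - 1
    if is_loop:
        print("loop: ", " -> ".join(chain))
    elif hops > 1:
        print("chain:", " -> ".join(chain))
```

Any chain reported here can usually be fixed by pointing the first URL straight at the last one, so a crawler needs only a single hop.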

How Often Does Google Crawl Your Website?

How frequently Google decides to crawl your website depends on a lot of factors, but more frequent crawling is generally a good sign, as it leads to your content being discovered and ranked quickly. This frequency is closely tied to the crawl rate for your website.

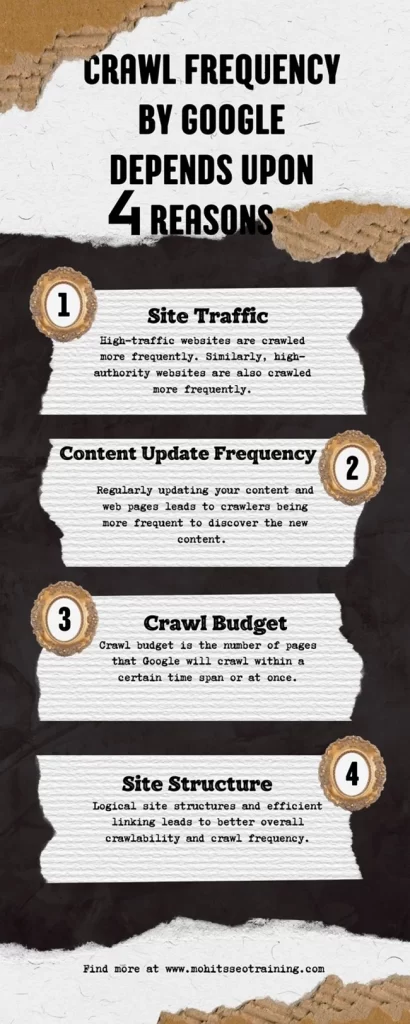

Crawl frequency by Google depends upon:

- Site Traffic – High-traffic websites are crawled more frequently. Similarly, high-authority websites are also crawled more frequently. This is why news websites may be crawled up to several times a day.

- Content Update Frequency – Regularly updating your content and web pages encourages crawlers to return more often to discover the new content. This is why websites posting daily updates, news snippets, etc. are crawled more frequently.

- Crawl Budget – Crawl budget is the number of pages that Google will crawl within a certain time span or at once. High-traffic and high-authority websites have a higher crawl budget.

Prioritizing important pages through the sitemap and robots.txt can help you ensure that important pages are crawled and indexed first.

- Site Structure – Logical site structures and efficient linking lead to better overall crawlability and crawl frequency. Efficient prioritization of content can also lead to better outcomes in crawling.
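A sitemap that lists your priority pages can be generated with Python’s standard library. This is a minimal sketch: the URLs and dates in `PAGES` are placeholders, `build_sitemap` is a hypothetical helper, and only the `loc` and `lastmod` elements of the sitemap protocol are used.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical list of important pages: (URL, last modified date).
PAGES = [
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/crawling", "2024-01-10"),
]


def build_sitemap(pages):
    """Return a sitemap XML string with one <url> entry per page."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap(PAGES)
print(sitemap_xml)
```

You would typically save the result as `sitemap.xml` in your site’s root directory and submit it through Google Search Console.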

How to Limit Crawling (And When Should You Do It?)

Some situations in which you may need to limit crawling are:

- Server load issues due to excessive crawling.

- Keeping low-value or private content out of search results.

- Prevention of crawling during site maintenance activities.

- Avoiding the wastage of crawl budgets.

Measures you can use to limit crawling in these scenarios are:

- Using the robots.txt file to disallow crawling of certain sections, pages, or file types (such as admin content, expired content, etc.). Keep in mind that robots.txt controls crawling rather than indexing, so a blocked URL can still be indexed if other pages link to it.

- Noindex and nofollow meta tags tell search engines not to index a specific page or not to follow its links. You can use them to keep certain pages or sections out of search results. Note that a crawler must be able to fetch a page to see these tags, so do not also block that page in robots.txt.

- You can also use robots.txt to set a crawl delay for your website. Some search engines, such as Bing, honor this crawl delay and adjust their crawl rate accordingly, but Googlebot ignores it.

- Implement canonical tags to indicate the preferred version of a page when multiple versions exist, helping to consolidate crawl focus on the canonical URL.
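To check which of these signals a page actually sends, you can read its robots meta tag and canonical link. The sketch below parses two hypothetical `<head>` snippets with Python’s built-in HTML parser. The page contents and the `read_directives` helper are assumptions made for this example.

```python
from html.parser import HTMLParser

# Hypothetical <head> snippets for two pages.
ADMIN_PAGE = '<head><meta name="robots" content="noindex, nofollow"></head>'
FILTERED_PAGE = (
    '<head><link rel="canonical" '
    'href="https://www.example.com/shoes"></head>'
)


class DirectiveParser(HTMLParser):
    """Collects robots meta directives and the canonical URL of a page."""

    def __init__(self):
        super().__init__()
        self.robots = set()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            for part in content.split(","):
                if part.strip():
                    self.robots.add(part.strip().lower())
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")


def read_directives(html):
    """Return (set of robots directives, canonical URL or None)."""
    parser = DirectiveParser()
    parser.feed(html)
    parser.close()
    return parser.robots, parser.canonical


print(read_directives(ADMIN_PAGE))
print(read_directives(FILTERED_PAGE))
```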

Key Takeaways

Improving crawlability is basic hygiene that SEOs often ignore in favor of content quality and on-page optimization. Sure, that stuff is very important, but without the right technical SEO work, such as proper crawling and site structure, Google may never even discover your content, and your effort goes to waste.

How Can We Help?

At Mohit’s SEO Training, we believe in providing honest SEO consulting services. We are one of the top SEO consultants in Bangalore. We deal in Local SEO, International SEO, and e-Commerce SEO. Contact us today to order your 30-day sprint.

Along with providing SEO consulting services in Bangalore, we offer a practical Advanced SEO Course in Bangalore.

FAQs

What is crawling and indexing?

Crawling is the process by which search engine bots discover new content and analyze it. If it meets certain quality and technical requirements, it leads to indexing, where the content is included in the search engine’s database, ready for public viewership.

Why is crawling important in SEO?

Crawling is important because it is the first step towards getting your web page to appear in top search results. If your website has good crawlability, it will be indexed faster and will be more readily available for ranking.

What is crawling in simple words?

Google’s robots are constantly searching the web for new and updated content. This process is known as crawling.

What is the crawling process?

Search engine robots scan the web for new and updated content. If they are led to it (through links and sitemaps), they analyze it for quality and technical aspects (load speeds, mobile optimization, etc.). The web page is indexed if it meets all these requirements.

What is web scraping and crawling?

Web scraping refers to the automated collection of specific data from across the web, for example, the price of the same item from various shops. On the other hand, crawling is the automated process of discovering new and updated content by bots that search engines use, called ‘crawlers’ or ‘spiders’.

Mohit Verma

I am an experienced professional with 9+ years of experience in Search Engine Optimization. I am on a mission to provide industry-focused, job-oriented SEO training so that students and mentees can get their dream SEO job and start working from day 1.